Contents

1- Introduction

2- Methodology

2-1 Testing face detection algorithms

2-2 Performing facial recognition

3- Results and discussion

4- Conclusion and recommendations

5- References

Facial recognition system to support health facilities

Eng. Mohamad Saemeh ¹, Eng. Hasan Khribiati ¹, Dr. Jomana Al-Deab ², Dr. Mohammed Daear ³

¹ Medical engineer, Faculty of Medical Engineering, Al Andalus University for Medical Sciences.

² Assistant Professor, Faculty of Mechanical and Electrical Engineering, Al-Baath University.

³ Ph.D. Lecturer, Faculty of Mechanical and Electrical Engineering, Damascus University.

Abstract

This research presents a software system to serve health facilities. The idea arose from several problems these facilities face, such as receiving patients who have fainted or suffer from memory loss and whose data must be known as quickly as possible, both to avoid errors in urgent cases and to prevent cases in which newly appointed doctors give a patient the wrong medicine. The proposed solution is a computer program that addresses these problems. The research aims to organize patient data electronically so that it is easy to search, to identify a patient quickly from their face, and to give the staff concerned a detailed report on the patient's condition, thereby avoiding medical errors that may lead to the patient's death. To this end, an extensible program was designed: a database built with Structured Query Language (SQL) stores the data of each patient and is linked to a graphical interface programmed in Python to make interaction easier for the user, and a facial recognition system was designed in MATLAB. The research uses artificial intelligence and deep learning techniques to illustrate how computer and informatics technologies can be linked to medical engineering, which is an integral part of technological development worldwide.

Keywords: health facilities, database, graphical interface, Python, MATLAB, deep learning

1. Introduction

Health facilities in general face many problems, whether in receiving new patients or in retrieving the paper files of returning patients, which takes time, not to mention the risk of damage during storage in warehouses or of data loss due to fire or similar events. Digital technologies have made our lives easier, hence the idea of linking health facilities electronically.

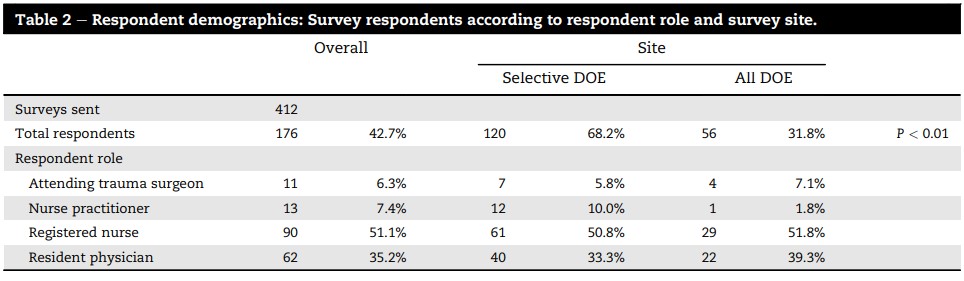

Statistics report confusion and loss of information when nurses and doctors treat unidentified patients. In one study, the unidentified patients were divided into a selected sample and a comprehensive sample; the selected sample accounted for 68.2% and the comprehensive sample for 31.8% [1].

Figure 1: The difference between the random sample and the selected sample, showing the percentage of cases in each.

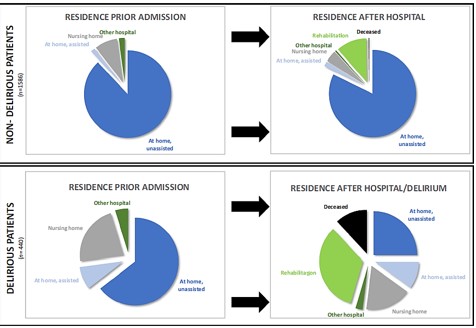

Another cohort study, published in 2022 and covering 2026 patients, examined the development of delirium (the inability to think logically) in special cases. It found that 440 of the 2026 patients (21.7%) developed delirium [2].

Figure 2: Data on the development of delirium in patients.

A statistical study of the causes and factors behind medical errors committed by nurses in the emergency department reported that 228 nurses had committed medical errors [3].

In this research, a system consisting of two parts was created: a database to store patient information and a facial recognition system. For the facial recognition system, the MATLAB environment was chosen because of the techniques and tools it provides: it is an integrated, convenient, and simple environment for using deep learning (DL) techniques and for creating a graphical user interface (GUI). Several algorithms, explained later, were used for face detection, with the aim of increasing the accuracy of detecting faces in an image or in real-time video. For face recognition, many techniques were considered, but transfer learning was judged the best because it saves the effort and time needed to build and train a neural network from scratch. Google's deep network (GoogleNet) has been pre-trained on more than one million images and can classify images into 1,000 different object categories. The application's GUI was built with MATLAB's App Designer, which allows the interface to be assembled from ready-made components and programmed without leaving the tool.

The second part of the system is a database for storing and organizing patient data, linked to a graphical interface to make interaction easier for the user. Python was chosen because of the large number of supporting libraries it provides and because Structured Query Language (SQL) can be used easily from within the same Python environment. The data to be stored was specified first; the medical database and its tables were then created with SQL, using Microsoft SQL Server, before being linked to the GUI. In the last step, the GUI was built so that the user can easily modify and view the data stored in the database.

The GUI was built with the Tkinter library and consists of several windows, each with a specific function.

A 2020 study proposed a deep tree-based model for automatic face recognition in a cloud environment.

The tree is defined by its branching factor and height, and each branch is represented by a residual function composed of a convolutional layer, batch normalization, and a nonlinear function.

The proposed model was evaluated on several publicly available databases, and its performance was compared with that of recent deep facial recognition models.

Experiments were performed on three face databases (FEI, ORL, and LFW). The proposed model achieved an accuracy of approximately 99% on each database, and the information density of the single tree-based model was close to 60%, which is considered excellent for a deep model. Its accuracy was similar to that of existing deep models [4].

A study published in September 2020 proposed using facial recognition and advanced computer imaging technology to replace printed prescriptions, physical components such as RFID, and record files. The results obtained from the facial recognition system are presented to the patient with several factors in mind, such as inefficiency, the time spent at reception, the effort medical staff spend identifying the patient, and the retrieval of accurate details of the patient's medical history, previous specialist visits, and prescriptions.

The database associated with the patient's face image can also be published on a secure platform, where it can be updated periodically, serve globally as the basis of the patient's identity, and be available to doctors in all approved medical centers for study and immediate action.

The system can also be used in hospitals to track staff and patients more efficiently and faster than the current record-based approach.

The model was compared with earlier work on accurate facial recognition and reached an accuracy of 97.35% on the LFW database [5].

In the same year, a study aimed to develop an application that uses face recognition and cloud storage to simplify the transfer of knowledge between medical practitioners and patients. This augmented reality application is designed specifically for the medical sector: it combines facial recognition, augmented reality, and cloud computing to store and display patients' medical information, and it is activated on a handheld device when an action is initiated.

The patient information system was designed with face recognition technology and cloud storage as its foundation.

The Scrum methodology was used to develop the program, JetBrains PyCharm and Android Studio 3 were used to build the application, Google smart glasses were used for face recognition, and the system was specified with six diagrams in the Unified Modeling Language (UML) [6].

2. Methodology

2.1 Testing face detection algorithms

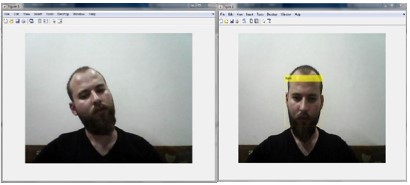

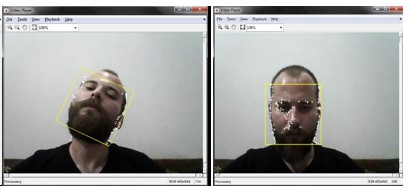

First, the Viola-Jones algorithm [7] was tested to verify its ability to detect a human face in real time.

However, as shown in Figure (3), it had difficulty detecting the face when it moved or tilted: the face had to be kept still in front of the camera until it was detected, which is a problem when faces must be detected in unstable conditions.

Figure 3: Viola-Jones algorithm testing process.

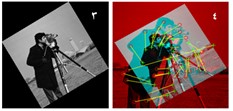

In the second step, the Kanade-Lucas-Tomasi (KLT) algorithm [8] was tested by studying its ability to track a change in a random image after a set of distinct points had been selected. Figure (4) shows the steps used to test the algorithm.

Figure 4: Using the KLT algorithm to track image changes: 1. reading the image, 2. selecting distinct points, 3. applying a change to the original image, 4. the result of applying the algorithm.

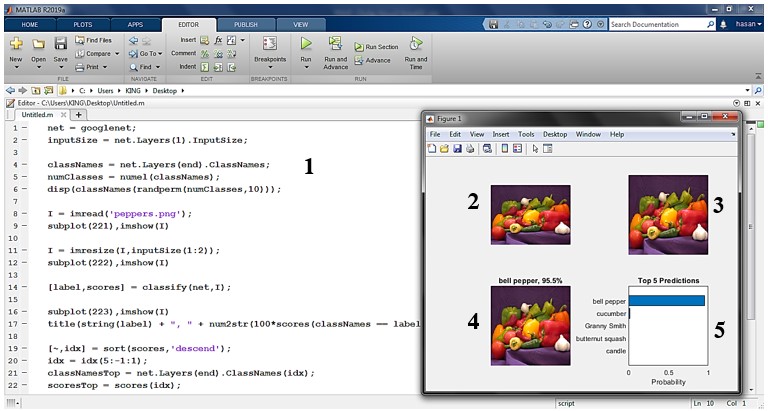

The GoogleNet network was then tested for its ability to classify images. Figure (5) shows the testing process, which was carried out in the following steps:

Reading the image, resizing it to match the size of the network's input layer, classifying the image with the network, computing the top predictions proposed by the network, and displaying the result of the previous steps.

Figure 5: Testing GoogleNet and showing the test result: 1. the code, 2. reading the image, 3. resizing the image, 4. recognizing the content of the image, 5. showing the network's predictions.
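The last two steps of the GoogleNet test (computing the top predictions and displaying them) can be sketched in a few lines. The following Python sketch is illustrative only: it is not the MATLAB code used in this work, and the class names and raw scores are made-up values standing in for the network's output.

```python
import math


def softmax(scores):
    """Convert raw network scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_predictions(labels, scores, k=3):
    """Return the k most probable (label, probability) pairs, best first."""
    probs = softmax(scores)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical labels and scores, for illustration only.
labels = ["bell pepper", "cucumber", "banana", "orange"]
scores = [4.1, 1.3, 0.2, 2.7]

for label, prob in top_predictions(labels, scores):
    print(f"{label}: {prob:.1%}")
```

Sorting the full probability list is sufficient for a network with about a thousand classes; a partial sort would only matter for much larger label sets.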

2.2 Performing facial recognition

Two components of the face recognition program were modified: the (Viola-Jones) algorithm, to increase the accuracy of face detection, and the (GoogleNet) network [9], to make it suitable for face recognition training.

Modifying the face detection algorithms

To increase the detection accuracy of the (Viola-Jones) algorithm and to eliminate the problem of faces going undetected during tilt or movement, (Eigenface) [10] and (KLT) were used to support detection, in the following steps:

Facial boundaries and characteristic features were detected using the minimum eigenvectors algorithm (EigenVectors) and eigenvalues (EigenValues); the detected features and boundaries were marked with clearly visible points; the points were tracked through the video using the Kanade-Lucas-Tomasi (KLT) algorithm; and finally, the geometric transformation was estimated from matching pairs of points.
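The final step, estimating a geometric transformation from matched point pairs, can be illustrated with a least-squares fit. The Python sketch below is an illustration under stated assumptions rather than the MATLAB routine used in this work: it fits a similarity transform (rotation, uniform scale, and translation) and omits outlier rejection, which a practical tracker would add. The function name and the sample points are hypothetical.

```python
import cmath


def estimate_similarity(src, dst):
    """Least-squares fit of dst ~ a * src + b, with points as complex numbers.

    abs(a) is the scale, cmath.phase(a) the rotation angle, b the translation.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched point pairs")
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    p_mean = sum(p) / len(p)
    q_mean = sum(q) / len(q)
    # Closed-form solution of the complex linear least-squares problem.
    numerator = sum((qi - q_mean) * (pi - p_mean).conjugate() for pi, qi in zip(p, q))
    denominator = sum(abs(pi - p_mean) ** 2 for pi in p)
    if denominator == 0:
        raise ValueError("source points must not all coincide")
    a = numerator / denominator
    b = q_mean - a * p_mean
    return a, b


# Hypothetical tracked points: the face rotated by 90 degrees and shifted by (5, 2).
previous_points = [(0, 0), (1, 0), (1, 1), (0, 1)]
current_points = [(5, 2), (5, 3), (4, 3), (4, 2)]

a, b = estimate_similarity(previous_points, current_points)
print(f"scale={abs(a):.2f}, rotation={cmath.phase(a):.2f} rad, shift=({b.real:.1f}, {b.imag:.1f})")
```

Applying the fitted transform to the corners of the previous bounding box gives the box for the current frame, which is how a tracked face region can follow tilt and movement between detections.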

Modifying the (GoogleNet) network

The network structure was modified to make it reusable as a trainable tool for face recognition. The modification was carried out in the following steps:

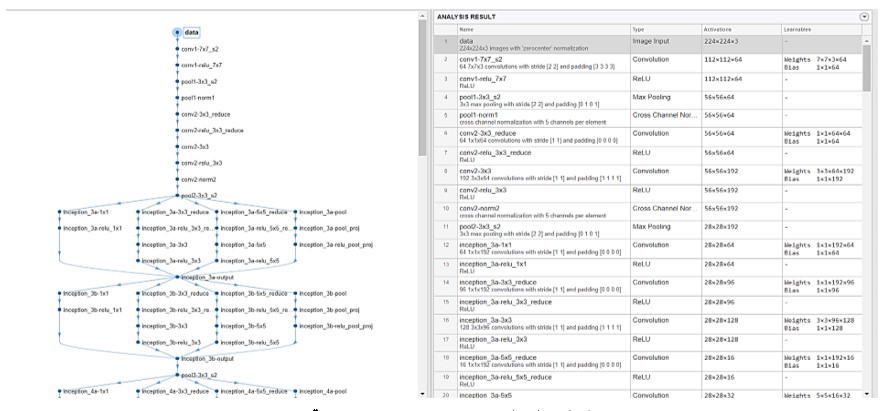

The network was loaded by importing its model into the MATLAB environment. Figure (6) shows the interactive visualization of the network structure, with detailed information about the network layers, produced by the analyzeNetwork function in MATLAB.

Figure 6: GoogleNet layers within the MATLAB environment.

The last learnable layer (Loss3-Classifier, layer 142 of the network) and the final classification layer (Output) of the (GoogleNet) model contain the information on how the features extracted by the network are combined into class probabilities, a loss value, and predicted labels. The convolutional layers of the network extract the image features that these two layers use to classify the input image, where:

The last learnable layer (Loss3-Classifier) is a fully connected layer with learnable weights, responsible for learning the task-specific features, and the final classifier (Output) is the classification layer that produces the predicted label.

Finally, the appropriate modifications were made programmatically in the MATLAB environment:

The last learnable layer (Loss3-Classifier) was replaced by a new fully connected layer whose number of outputs equals the number of classes in the new dataset.

The final classification layer (Output) was replaced by a new classification layer adapted to the classes of the new dataset.

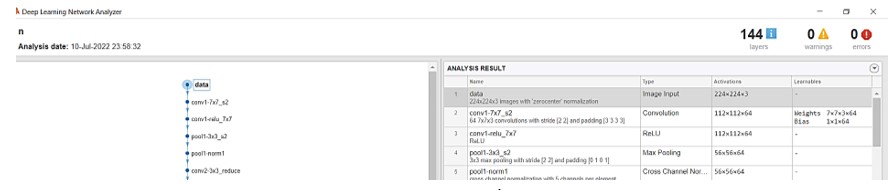

To verify that the network was ready for training after the modifications, it was analyzed again with the analyzeNetwork function in MATLAB, and the interactive visualization of the new network structure was inspected to confirm that the modifications had been applied. If the (Deep Learning Network Analyzer) tool reports no errors, the modified network is ready for training.

In the next step, the face samples to be used for training were prepared and the network settings were adjusted before training began.

As Figure (7) shows, the first element in the network layers is the image input layer. This layer requires input images of size (224 x 224 x 3), where 3 is the number of color channels.

Figure 7: Specifications and dimensions of the input layer in the (GoogleNet) network.

Therefore, the software was configured to resize the images before they were used to train the network.

In the next step, the training settings were configured. The network was first trained with the default settings, and the settings were then modified in several stages as follows:

Setting the initial learning rate to a small value to slow down learning in the transferred layers.

Setting the validation frequency so that the accuracy on the validation data is calculated once per epoch.

Selecting a small number of epochs.

Choosing a mini-batch size that divides the number of training samples evenly.

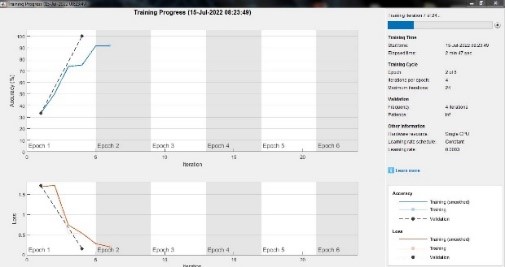

After the training process with the initial settings had been examined and tracked, the training settings were adjusted so that training reached the best accuracy with the lowest error rate; there was no benefit in training further once the model had reached the highest possible accuracy and the lowest possible error. Figure (8) shows the process of training the network using the new settings.

Figure 8: Progress of the network training process.

The final settings were as follows:

Initial learning rate = 0.00743

Validation frequency = (number of augmented training images) / (mini-batch size)

Epochs = 6

Mini-batch size = 5
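The relationship between these settings can be made concrete with a short calculation. The Python sketch below is illustrative only (the training itself was done in MATLAB): the learning rate is read as 0.00743, the number of training images is a hypothetical value because the size of the training set is not stated here, and the dataclass and its field names are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingSettings:
    initial_learning_rate: float = 0.00743
    epochs: int = 6
    mini_batch_size: int = 5

    def validation_frequency(self, num_training_images: int) -> int:
        """Iterations between validation runs: one validation pass per epoch."""
        return num_training_images // self.mini_batch_size

    def total_iterations(self, num_training_images: int) -> int:
        """Total number of mini-batch updates over all epochs."""
        return self.validation_frequency(num_training_images) * self.epochs


settings = TrainingSettings()
num_training_images = 60  # hypothetical training-set size

print("validation every", settings.validation_frequency(num_training_images), "iterations")
print("total iterations:", settings.total_iterations(num_training_images))
```

Choosing a mini-batch size that divides the number of training images evenly means no images are dropped at the end of an epoch, so every validation run falls exactly on an epoch boundary.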

In the penultimate step, the validation images were classified using the fine-tuned network and the classification accuracy was calculated, by means of a function written to test the network after training.

A probabilistic estimate of who is in front of the camera is displayed, with the network's convolutional operations applied to each camera frame independently.
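Classification accuracy on the validation images is the share of images whose predicted label matches the true label. A minimal Python sketch of that calculation follows; the labels are hypothetical, and the code illustrates the arithmetic rather than the MATLAB test function written for this work.

```python
def classification_accuracy(predicted, actual):
    """Fraction of validation images whose predicted label equals the true label."""
    if len(predicted) != len(actual):
        raise ValueError("label lists must have the same length")
    if not actual:
        raise ValueError("at least one validation image is required")
    correct = sum(1 for p, a in zip(predicted, actual) if p == a)
    return correct / len(actual)


# Hypothetical validation labels, for illustration only.
actual = ["patient_a", "patient_a", "patient_b", "patient_b", "patient_c"]
predicted = ["patient_a", "patient_b", "patient_b", "patient_b", "patient_c"]

print(f"accuracy = {classification_accuracy(predicted, actual):.0%}")
```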

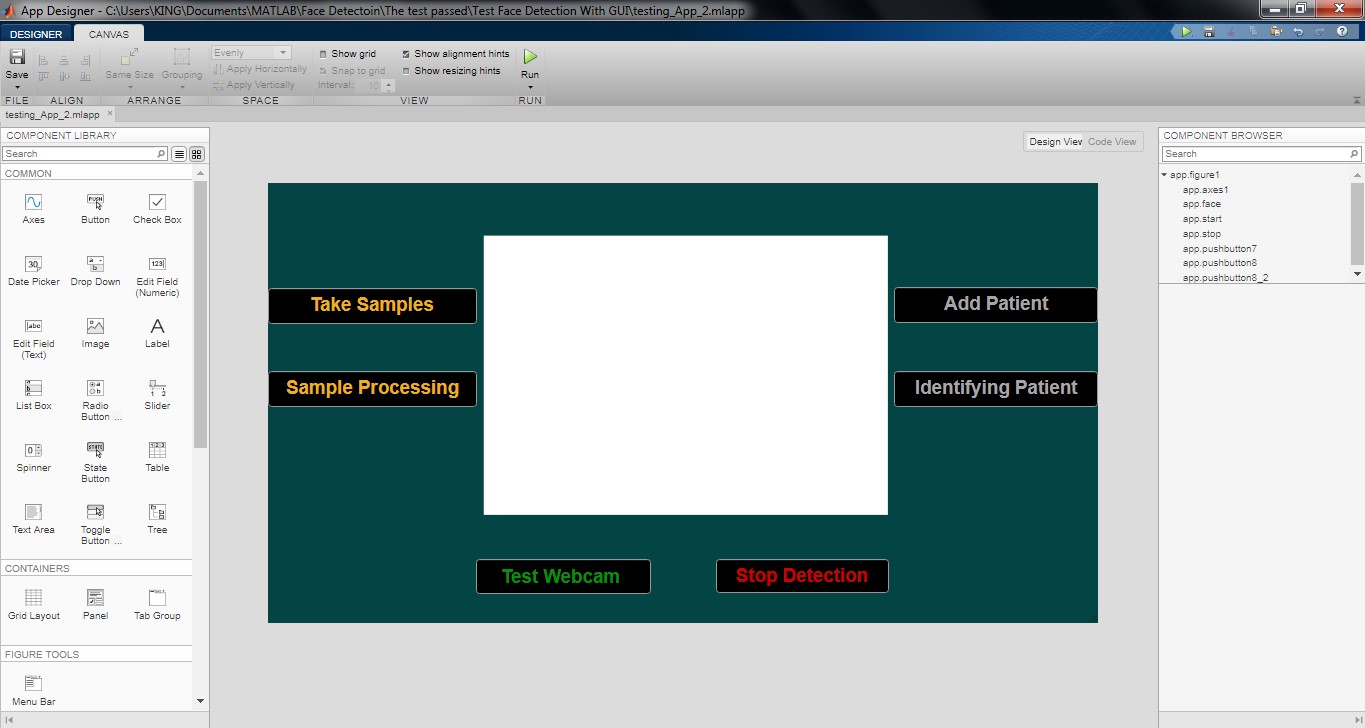

In the last step of the first part of the research, a graphical user interface (GUI) was built that combines the previously described components of the facial recognition system. The goal of the interface is to make the system easier to use. It was built with App Designer in the MATLAB environment, and Figure (9) shows the process of building the initial version. The interface provides the ability to:

Identify individuals who have already been defined in the system.

Add new people to the system easily, by detecting the face and capturing samples of it automatically, preparing the samples for training, and starting the training process once the add command is given.

Figure 9: The process of building the GUI.

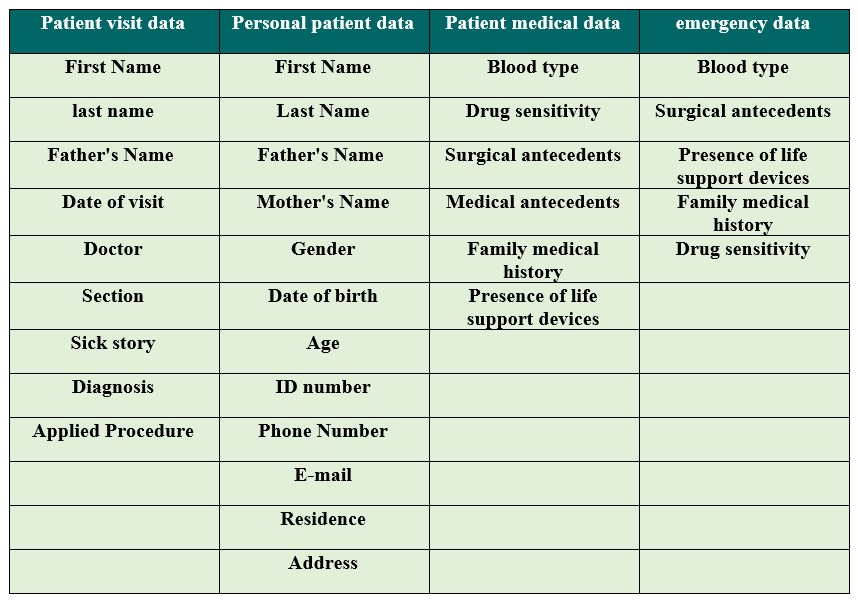

The second part of the research is a database for storing and organizing patient data, linked to a graphical interface to make interaction easier for the user. Python was the best option because of the large number of supporting libraries it provides and because Structured Query Language (SQL) can be used easily from within the same Python environment. This part of the system was created in the following steps.

In the first step, the data to be stored in the database was determined and divided into several categories. Figure (10) shows the data included in each category. The categories are:

Patient personal data, patient medical data, patient visits to the health facility, and emergency data.

Figure 10: Data types in the database sections.
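To make the four data categories concrete, the following Python sketch creates one table per category and links them through a patient key. It is a minimal illustration under stated assumptions: it uses the standard-library sqlite3 module with an in-memory database instead of Microsoft SQL Server, and every table and column name is hypothetical, since the actual fields appear only in Figure (10).

```python
import sqlite3

# Hypothetical schema: one table per data category, keyed by patient_id.
SCHEMA = """
CREATE TABLE patient (
    patient_id      INTEGER PRIMARY KEY,
    national_number TEXT UNIQUE NOT NULL,
    full_name       TEXT NOT NULL,
    birth_date      TEXT
);
CREATE TABLE medical_data (
    patient_id         INTEGER PRIMARY KEY REFERENCES patient(patient_id),
    blood_group        TEXT,
    chronic_conditions TEXT
);
CREATE TABLE visit (
    visit_id   INTEGER PRIMARY KEY,
    patient_id INTEGER NOT NULL REFERENCES patient(patient_id),
    visit_date TEXT NOT NULL,
    reason     TEXT
);
CREATE TABLE emergency_data (
    patient_id        INTEGER PRIMARY KEY REFERENCES patient(patient_id),
    allergies         TEXT,
    emergency_contact TEXT
);
"""

# Search fields the sketch accepts, mapped to qualified column names.
SEARCHABLE = {
    "full_name": "p.full_name",
    "national_number": "p.national_number",
    "blood_group": "m.blood_group",
}


def create_database():
    """Create an in-memory database with one table per data category."""
    connection = sqlite3.connect(":memory:")
    connection.execute("PRAGMA foreign_keys = ON")
    connection.executescript(SCHEMA)
    return connection


def find_patients(connection, **criteria):
    """Query patients by any combination of allowed fields, using bound parameters."""
    unknown = set(criteria) - set(SEARCHABLE)
    if unknown:
        raise ValueError(f"unsupported search fields: {sorted(unknown)}")
    where = " AND ".join(f"{SEARCHABLE[name]} = ?" for name in criteria) or "1 = 1"
    sql = (
        "SELECT p.patient_id, p.full_name, m.blood_group FROM patient p "
        "LEFT JOIN medical_data m ON m.patient_id = p.patient_id WHERE " + where
    )
    return connection.execute(sql, tuple(criteria.values())).fetchall()


connection = create_database()
connection.execute("INSERT INTO patient VALUES (1, 'N-001', 'Example Patient', '1980-01-01')")
connection.execute("INSERT INTO medical_data VALUES (1, 'O+', NULL)")
print(find_patients(connection, blood_group="O+"))
```

Keeping emergency data in its own table means an emergency view can read a single row by patient key, and passing search values as bound parameters avoids building SQL from user input.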

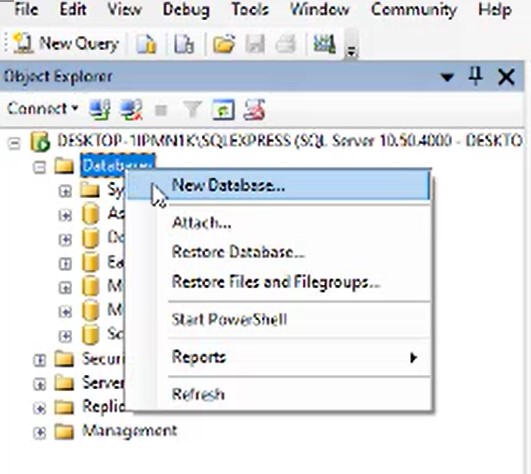

In the second step, the database was built: Structured Query Language (SQL) was used to create the data structures and their tables before they were linked to the graphical user interface (GUI). Microsoft SQL Server was used. After the program was opened, a new database was created by pressing the (New Database) button, as in Figure (11).

Figure 11: Creating a new database.

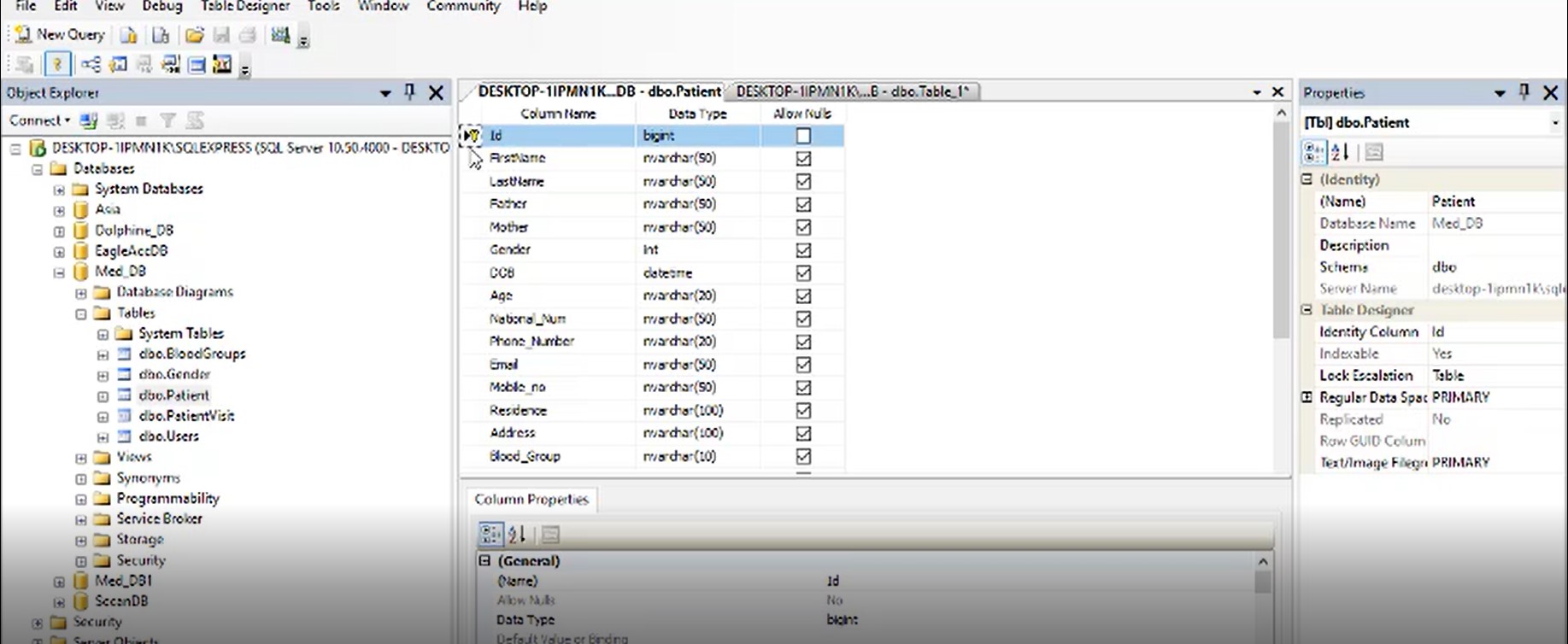

Within the database, tables for patients were created, and the name of each field and its type (nominal variable, numeric variable, foreign key, and so on) were specified, as in Figure (12).

Figure 12: A database within Microsoft SQL Server.

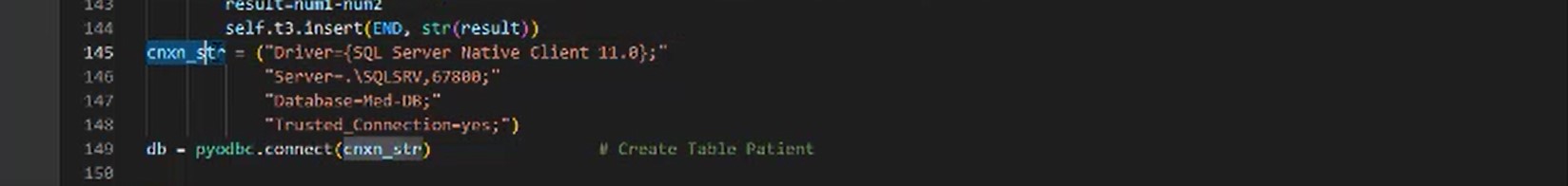

After the tables had been created, the database was linked to Python through code that contains the connection information as a set of parameters: the server version, the server name, and the database name. These parameters enable the program to complete the link and confirm a trusted connection, as in Figure (13).

Figure 13: The code to connect the graphical interface to the database.
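A connection of this kind is usually described by a connection string that names the driver, the server, and the database, and requests a trusted (Windows-authenticated) connection. The Python sketch below only builds such a string. The server and database names are placeholders, the driver name is an assumption about the installed ODBC driver, and the use of the third-party pyodbc package (shown in a comment) is also an assumption, because the library actually used appears only in Figure (13).

```python
def build_connection_string(server, database, driver="ODBC Driver 17 for SQL Server"):
    """Build an ODBC connection string for SQL Server with a trusted connection."""
    parts = {
        "DRIVER": "{" + driver + "}",
        "SERVER": server,
        "DATABASE": database,
        "Trusted_Connection": "yes",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"


# Placeholder names, for illustration only.
connection_string = build_connection_string(r"localhost\SQLEXPRESS", "HospitalDB")
print(connection_string)

# With the third-party pyodbc package installed, the string would be used like this:
#   import pyodbc
#   connection = pyodbc.connect(connection_string)
```

Keeping the connection details in one function makes it easy to move the system to a different server without touching the interface code.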

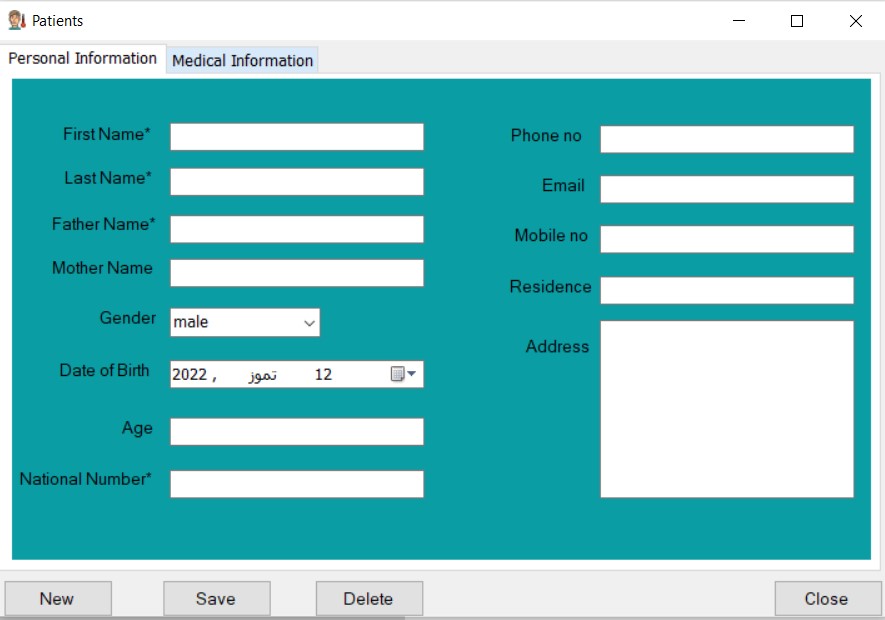

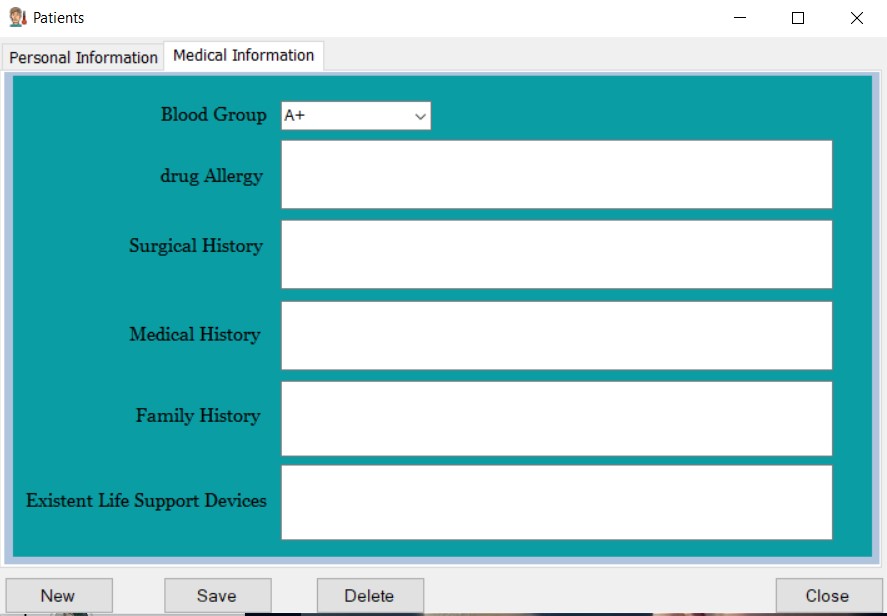

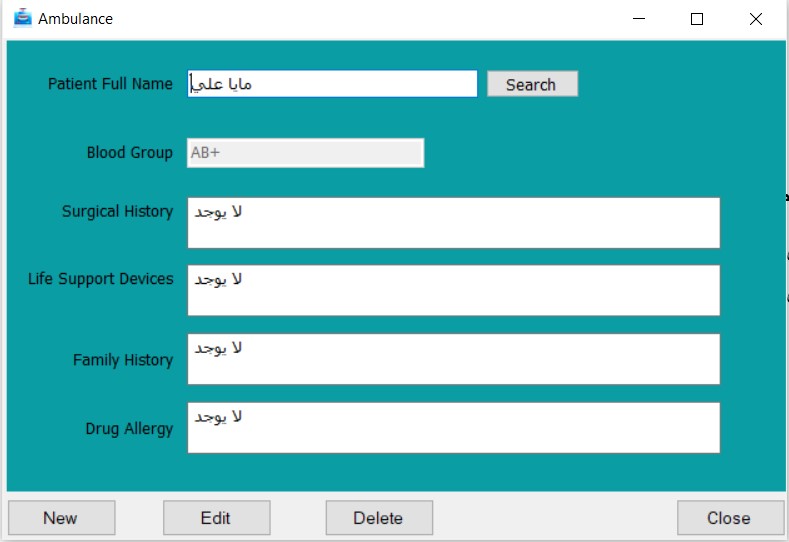

In the last step, a graphical user interface (GUI) was built to make it easier for the user to modify and view the data stored through Structured Query Language (SQL).

The GUI was built with the (Tkinter) library and consists of several windows, each with a specific function:

Security window: It protects the security and privacy of patient data; the user must enter a correct user name and password to gain access to the other windows.

Information entry windows: They allow the user to modify existing data and add new data to the database; there is one entry window for each category of data.

Inquiry windows: They allow the user to view patient data stored in the database; there is one display interface for each category of data, and a patient can be looked up by more than one criterion, such as name, age, blood group, or national number.

Emergency window: It is also a display window, but it shows the patient's sensitive medical data on its own and is used in emergencies to avoid possible medical errors.
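As an illustration of the check performed by the security window, the Python sketch below verifies a user name and password against a stored salted hash rather than a plain-text password. This reflects an assumption about good practice, not a description of the implemented system; the user store, the function names, and the iteration count are hypothetical.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # hypothetical work factor


def hash_password(password, salt=None):
    """Return (salt, digest) for a password using PBKDF2-HMAC-SHA256."""
    salt = os.urandom(16) if salt is None else salt
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_login(users, username, password):
    """Return True only if the user exists and the password matches."""
    record = users.get(username)
    if record is None:
        return False
    salt, expected = record
    _, candidate = hash_password(password, salt)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(candidate, expected)


# Hypothetical user store; a real system would keep this in the database.
users = {"reception": hash_password("example-password")}

print(verify_login(users, "reception", "example-password"))
print(verify_login(users, "reception", "wrong-password"))
```

In a Tkinter application, a function like `verify_login` would be called from the login button's callback, and the other windows would be opened only when it returns True.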

3. Results and discussion

First: Results.

In the facial recognition part of the system, enhancing the (Viola-Jones) algorithm with the (Eigenface) and (KLT) algorithms increased the accuracy with which the system tracks and detects a face in front of the camera, as Figure (14) shows.

Figure 14: The results of modifying the face detection algorithm.

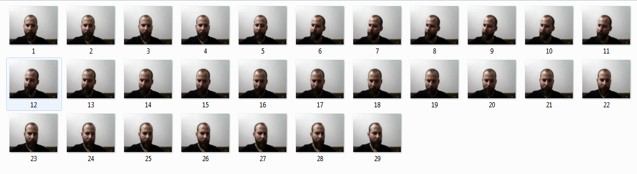

Regarding face recognition, the software function responsible for detecting the face in front of the camera and capturing samples was confirmed to work. Figure (15) shows samples captured with it.

Figure 15: Samples taken automatically using the software function.

The function responsible for cropping only the face region from the captured images was then verified. Figure (16) shows the results of applying it.

Figure 16: Images of the face region cropped from the original images.

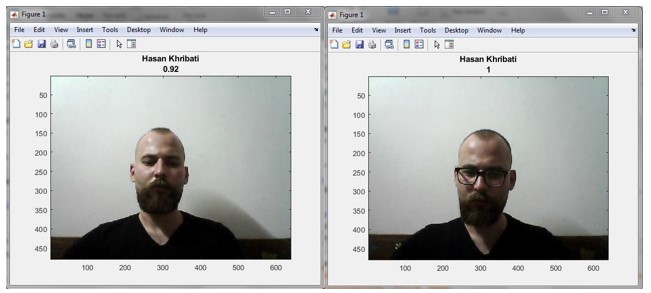

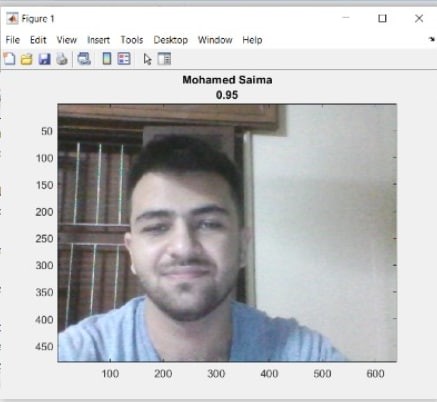

After the modified network model had been trained, it was confirmed that it can recognize faces in front of the camera and display the confidence of each prediction, as shown in Figure (17).

Figure 17: Verifying the ability of the network to recognize different faces.

Figure (18) shows the graphical user interface of the face recognition system, which includes buttons for turning on face detection, starting face sampling, preparing the captured samples, adding a new patient, and turning on face recognition, as well as instructions to guide the user.

Figure 18: The user interface of the facial recognition system.

For the other part of the research, the medical database, the programming was done in Structured Query Language (SQL), with the following results.

The security interface, shown in Figure (19), requires the user's name and password before it allows access to the database.

Figure 19: The security interface of the medical database.

The main interface of the medical database, shown in Figure (20), includes three buttons that take the user to the other windows of the database.

Figure 20: The main database interface.

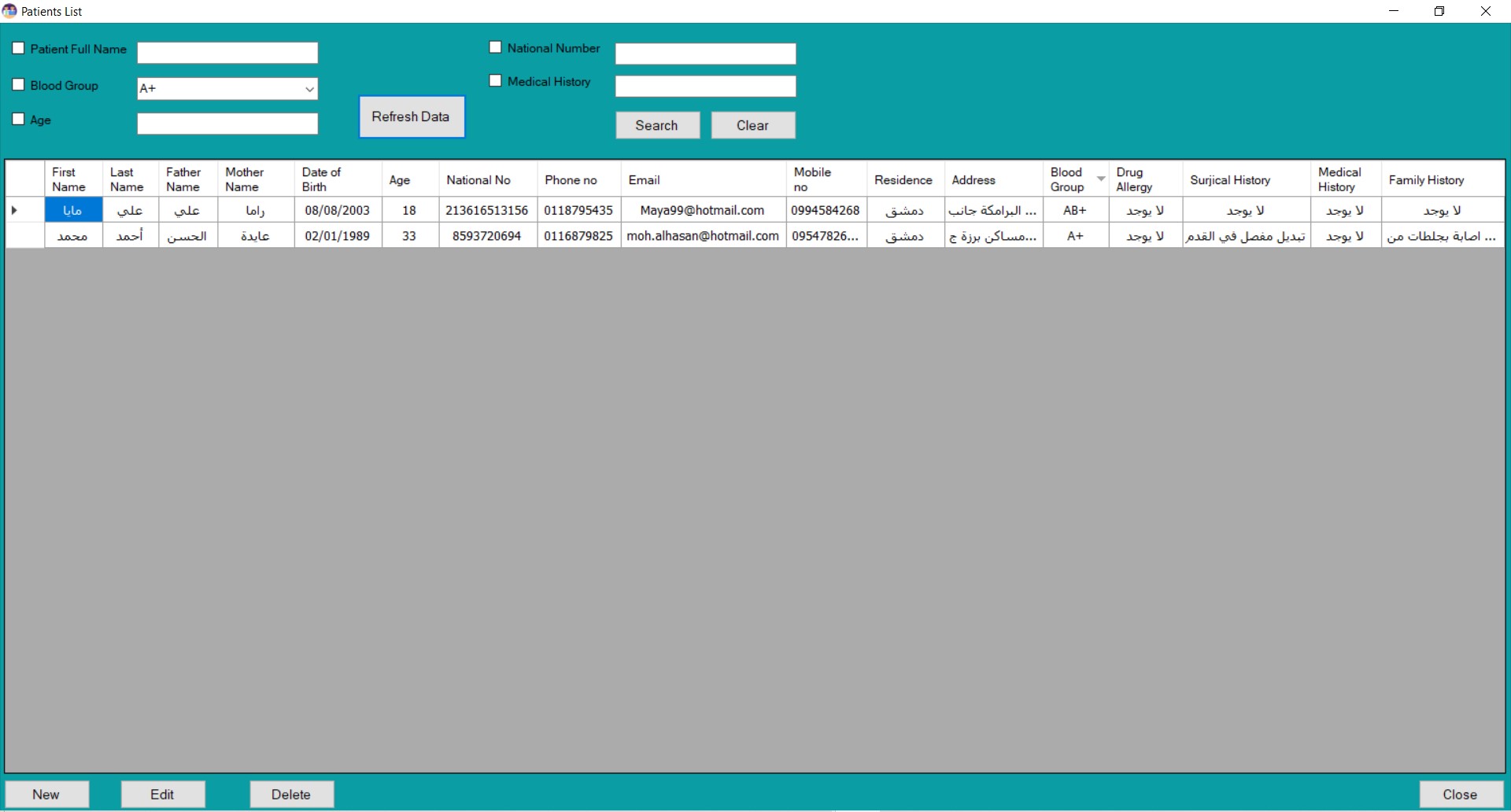

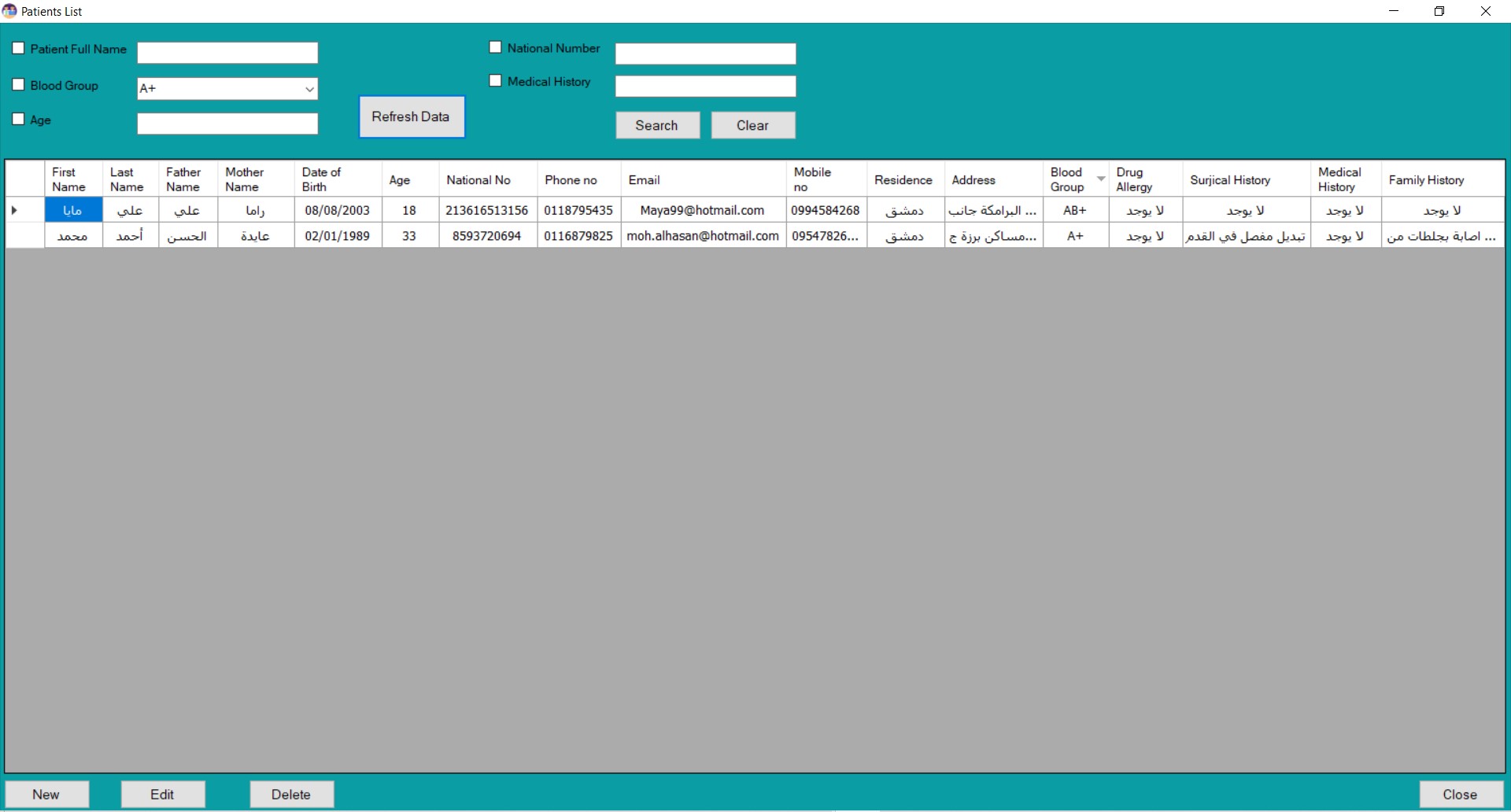

All patient data is accessed through these three windows.

Opening the (Patient Visits) tab displays the interface for querying visit data. Figure (21) shows a set of previously entered data and the query methods available within the interface.

Figure 21: The interface responsible for querying and displaying patient visit data.

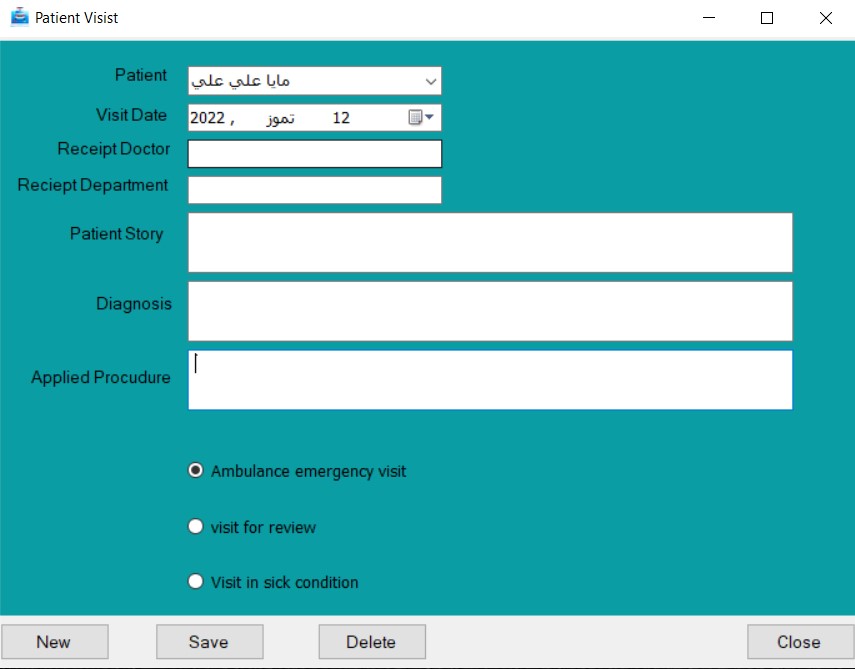

Pressing the (New) button opens the interface for entering patient visits, in which a visit can be recorded for a patient already registered in the database. Other operations are also available, such as modifying records and querying patients by several variables, as shown in Figure (22).

Figure 22: The interface responsible for entering patient visit data.

Opening the second tab (Patient Information) displays the interface shown in Figure (23), through which patient data can be queried. Several operations can be performed here, such as adding or deleting a patient and querying patients by many variables.

Figure 23: The interface responsible for querying and displaying patient data.

The data entry interfaces in the patient visits section are divided into two parts: personal data and medical data.

Figure (24) shows the interface for entering the patient's personal data.

Figure 24: Interface for entering the patient's personal data.

Next comes the interface for entering the patient's medical data, shown in Figure (25).

Figure 25: Interface for entering the patient's medical data.

The third tab of the program (Emergency) displays the emergency data previously entered through the patient's medical data entry interface, as shown in Figure (26).

Figure 26: The interface responsible for displaying medical data in emergencies.

Second: Discussion.

The system's ability to detect faces was good: it accurately located the faces presented to the program, because the detection technique was supported by several algorithms at the same time.

For face recognition, the preparation of training samples was also satisfactory, but some errors occurred during sampling: fewer samples were captured than the function was asked to take, because of a delay in the synchronization of commands.

The system's ability to recognize faces in front of the camera was less reliable. Many recognition errors occurred because only a small number of images, 30 per person, was used to train the model.

The remaining recognition errors resulted from adding new data to the network when a new patient was added. The best available solution was to retrain the network on the entire dataset from the beginning each time a new patient was added, but this becomes a major problem when the amount of data is large.

All these factors led to errors and overlaps in the face recognition process.

The database stored and queried new information satisfactorily, but the query interface has a display problem: when a field contains a large amount of information, the field is too small to show it directly, and the user must place the cursor over the field to see it.

4. Conclusion and recommendations

In conclusion, a smart system was created to support health facilities. It comprises a medical database for patients linked to a facial recognition system that identifies a patient whose injury prevents them from speaking or from stating their identity or health problems. An emergency interface was added to display the patient's emergency data, which could save the patient's life by preventing a wrong diagnosis that might otherwise prove fatal.

We recommend the following for the future development of the system:

Adding an automated diagnostic system to detect cancer.

Adding other methods of identifying patients, such as recognition through the cornea of the eye and through fingerprints.

Adding a new interface to the database for diagnosing diseases of the heart, lung, and liver, in which medical parameters measured from the patient's body are used to give an appropriate diagnosis together with medical advice on the severity of the disease.

Adding a database for hospital workers (nurses, doctors, and others) to organize their data in the best possible way.

5. References

1. Janowak, Christopher F., Suresh K. Agarwal, and Ben L. Zarzaur. "What's in a Name? Provider Perception of Injured John Doe Patients." Journal of Surgical Research 238 (2019): 218-223.

2. Marquetand, Justus, et al. "Delirium in trauma patients: a 1-year prospective cohort study of 2026 patients." European Journal of Trauma and Emergency Surgery (2022): 1-8.

3. Kiymaz, Dilek, and Zeliha Koç. "Identification of factors which affect the tendency towards and attitudes of emergency unit nurses to make medical errors." Journal of Clinical Nursing 27.5-6 (2018): 1160-1169.

4. Masud, M., Muhammad, G., Alhumyani, H., Alshamrani, S. S., Cheikhrouhou, O., Ibrahim, S., & Hossain, M. S. "Deep learning-based intelligent face recognition in IoT-cloud environment." Computer Communications 152 (2020): 215-222.

5. Verma, V. K., Kansal, V., & Bhatnagar, P. "Patient Identification using Facial Recognition." 2020 International Conference on Futuristic Technologies in Control Systems & Renewable Energy (ICFCR), pp. 1-7. IEEE, September 2020.

6. Mohammed Hazim Alkawaz, Tuerxun Waili, and Syaza Marisa binti Adna. "Augmented Reality for Patient Information using Face Recognition and Cloud Computing." International Journal on Perceptive and Cognitive Computing (IJPCC) 6.1 (2020).

7. Viola, Paul, and Michael Jones. "Rapid object detection using a boosted cascade of simple features." Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001). Vol. 1. IEEE, 2001.

8. Barnouti, Nawaf Hazim, Mohanad Hazim Nsaif Al-Mayyahi, and Sinan Sameer Mahmood Al-Dabbagh. "Real-Time Face Tracking and Recognition System Using Kanade-Lucas-Tomasi and Two-Dimensional Principal Component Analysis." 2018 International Conference on Advanced Science and Engineering (ICOASE). IEEE, 2018.

9. Anand, R., et al. "Face recognition and classification using GoogleNET architecture." Soft Computing for Problem Solving: SocProS 2018, Volume 1. Springer Singapore, 2020.

10. Turk, Matthew A., and Alex P. Pentland. "Face recognition using eigenfaces." Proceedings of the 1991 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. IEEE Computer Society, 1991.